What is Apache Spark?

Apache Spark is an open-source, distributed processing system used for big data workloads. It uses in-memory caching and optimized query execution for fast analytic queries against data of any size. It provides development APIs in Java, Scala, Python and R, and supports code reuse across multiple workloads: batch processing, interactive queries, real-time analytics, machine learning, and graph processing. You’ll find it used by organizations in every industry, including FINRA, Yelp, Zillow, DataXu, Urban Institute, and CrowdStrike. Apache Spark has become one of the most popular big data distributed processing frameworks, with 365,000 meetup members in 2017.

Spark was created to speed up the Hadoop computational process and overcome its limitations, and it is now maintained by the Apache Software Foundation. But Spark is not a modified version of Hadoop. Spark has its own cluster management, and Hadoop is only one of the ways to deploy it.

Apache Spark is a cluster computing technology designed for fast computation. It builds on the Hadoop MapReduce model and extends it to handle more types of computation efficiently, such as interactive queries and stream processing. Spark can use Hadoop in two ways, although the second is optional because Spark has its own cluster management:

- Storage

- Process handling

Spark began in 2009 as a research project by Matei Zaharia at UC Berkeley’s AMPLab. It was open sourced in 2010 under a BSD license and donated to the Apache Software Foundation in 2013, which is why it is now known as Apache Spark.

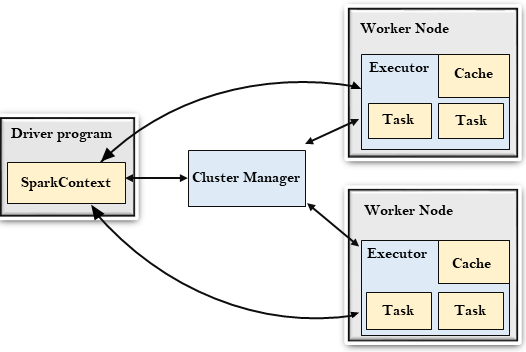

Apache Spark architecture

At a fundamental level, an Apache Spark application consists of two main components: a driver, which converts the user’s code into multiple tasks that can be distributed across worker nodes, and executors, which run on those nodes and execute the tasks assigned to them. Some form of cluster manager is necessary to mediate between the two.
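The driver and executor split described above can be pictured with a small standard-library sketch. This is only an analogy, not Spark code: a thread pool stands in for the executors, and the function names (`split_into_partitions`, `sum_of_squares_task`, `run_job`) are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Driver-side step: slice the input into roughly equal partitions."""
    if not data:
        return []
    size = -(-len(data) // num_partitions)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares_task(partition):
    """The task one 'executor' runs on a single partition."""
    return sum(x * x for x in partition)


def run_job(data, num_executors=4):
    """Driver: create one task per partition, hand the tasks to the
    executor pool, then combine the partial results."""
    partitions = split_into_partitions(data, num_executors)
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        partial_results = list(pool.map(sum_of_squares_task, partitions))
    return sum(partial_results)


if __name__ == "__main__":
    print(run_job(list(range(1, 11))))  # prints 385
```

In a real cluster the executors are separate processes on worker nodes and the cluster manager decides where they run; the thread pool here hides all of that.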

Out of the box, Spark can run in a standalone cluster mode that simply requires the Apache Spark framework and a JVM on each machine in your cluster. However, it’s more likely you’ll want to take advantage of a more robust resource or cluster management system to take care of allocating workers on demand for you. In the enterprise, this will normally mean running on Hadoop YARN (this is how the Cloudera and Hortonworks distributions run Spark jobs), but Apache Spark can also run on Apache Mesos, Kubernetes, and Docker Swarm.

If you seek a managed solution, then Apache Spark can be found as part of Amazon EMR, Google Cloud Dataproc, and Microsoft Azure HDInsight. Databricks, the company that employs the founders of Apache Spark, also offers the Databricks Unified Analytics Platform, which is a comprehensive managed service that offers Apache Spark clusters, streaming support, integrated web-based notebook development, and optimized cloud I/O performance over a standard Apache Spark distribution.

Apache Spark builds the user’s data processing commands into a Directed Acyclic Graph, or DAG. The DAG is Apache Spark’s scheduling layer; it determines what tasks are executed on what nodes and in what sequence.
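To make the idea of DAG-based ordering concrete, here is a minimal sketch using Python's standard `graphlib` module (Python 3.9 or later). The job and its step names are hypothetical, and this is not how Spark's scheduler is implemented: Spark additionally groups work into stages at shuffle boundaries and assigns tasks to nodes. The sketch only shows how a dependency graph determines what can run and in what sequence.

```python
from graphlib import TopologicalSorter

# Hypothetical job: each key is a step, each value is the set of
# steps that must finish before it can start.
job_dag = {
    "read_logs": set(),
    "read_users": set(),
    "filter_errors": {"read_logs"},
    "join": {"filter_errors", "read_users"},
    "count_by_user": {"join"},
}


def schedule(dag):
    """Return a list of waves. Steps in the same wave do not depend on
    each other, so they could run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(schedule(job_dag), start=1):
        print(number, wave)
```

The two read steps have no dependencies, so they land in the first wave together; every later step waits for the steps it depends on.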

Spark libraries

The Spark Core engine functions partly as an application programming interface (API) layer and underpins a set of related tools for managing and analyzing data. Aside from the Spark Core processing engine, the Apache Spark API environment comes packaged with some libraries of code for use in data analytics applications. These libraries include the following:

- Spark SQL — One of the most commonly used libraries, Spark SQL enables users to query data stored in disparate applications using the common SQL language.

- Spark Streaming — This library enables users to build applications that analyze and present data in real time.

- MLlib — A library of machine learning code that enables users to apply advanced statistical operations to data in their Spark cluster and to build applications around these analyses.

- GraphX — A built-in library of algorithms for graph-parallel computation.

Features of Apache Spark

Apache Spark has the following features.

- Speed − Spark can run an application in a Hadoop cluster up to 100 times faster in memory and 10 times faster on disk. This is possible because Spark reduces the number of read/write operations to disk by storing intermediate processing data in memory.

- Supports multiple languages − Spark provides built-in APIs in Java, Scala, Python, and R, so you can write applications in different languages. Spark also comes with more than 80 high-level operators for interactive querying.

- Advanced Analytics − Spark supports more than just ‘map’ and ‘reduce’ operations. It also supports SQL queries, streaming data, machine learning (ML), and graph algorithms.
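The speed point above rests on keeping intermediate data in memory instead of recomputing or re-reading it. The toy class below sketches that idea in plain Python. It is not Spark's API: the `Dataset` class is hypothetical, and its `map`, `cache`, and `collect` methods are only named to echo the corresponding Spark RDD operations.

```python
class Dataset:
    """Toy lazily evaluated dataset: work is recorded when a
    transformation is declared and only runs when collect() is called."""

    def __init__(self, compute):
        self._compute = compute
        self._cache_enabled = False
        self._cached = None

    def map(self, fn):
        # Nothing runs here; the new dataset just remembers how to compute itself.
        return Dataset(lambda: [fn(x) for x in self.collect()])

    def cache(self):
        self._cache_enabled = True
        return self

    def collect(self):
        if not self._cache_enabled:
            return self._compute()
        if self._cached is None:
            self._cached = self._compute()  # computed once, kept in memory
        return list(self._cached)


calls = {"expensive": 0}


def expensive(x):
    """Stand-in for a costly step; counts how often it is invoked."""
    calls["expensive"] += 1
    return x * 10


def count_expensive_calls(use_cache):
    """Run two downstream computations on the same intermediate dataset."""
    calls["expensive"] = 0
    intermediate = Dataset(lambda: [1, 2, 3]).map(expensive)
    if use_cache:
        intermediate.cache()
    total = sum(intermediate.map(lambda x: x + 1).collect())
    maximum = max(intermediate.map(lambda x: x * 2).collect())
    return calls["expensive"], total, maximum


if __name__ == "__main__":
    print(count_expensive_calls(use_cache=False))  # prints (6, 63, 60)
    print(count_expensive_calls(use_cache=True))   # prints (3, 63, 60)
```

Without caching, the costly step runs once per element for each downstream computation (six calls); with caching it runs only three times and the second computation reuses the in-memory result.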

Benefits of Apache Spark

Speed

Engineered from the bottom up for performance, Spark can be 100x faster than Hadoop for large-scale data processing by exploiting in-memory computing and other optimizations. Spark is also fast when data is stored on disk, and it set a world record for large-scale on-disk sorting in 2014.

Ease of Use

Spark has easy-to-use APIs for operating on large datasets. This includes a collection of over 100 operators for transforming data and familiar data frame APIs for manipulating semi-structured data.

A Unified Engine

Spark comes packaged with higher-level libraries, including support for SQL queries, streaming data, machine learning and graph processing. These standard libraries increase developer productivity and can be seamlessly combined to create complex workflows.

Conclusion

To sum up, Spark helps to simplify the challenging and computationally intensive task of processing high volumes of real-time or archived data, both structured and unstructured, seamlessly integrating relevant complex capabilities such as machine learning and graph algorithms. Spark brings Big Data processing to the masses. Check it out!


Author: Prithvi. [MBA in Digital marketing and Ecommerce]